LangChain

Founded Year

2022

Stage

Series A | Alive

Total Raised

$35M

Last Raised

$25M | 1 yr ago

Mosaic Score

-76 points in the past 30 days

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

About LangChain

LangChain specializes in the development of large language model (LLM) applications and provides a suite of products that support developers throughout the application lifecycle. It offers a framework for building context-aware, reasoning applications, tools for debugging, testing, and monitoring application performance, and solutions for deploying application programming interfaces (APIs) with ease. It was founded in 2022 and is based in San Francisco, California.


ESPs containing LangChain

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The large language model (LLM) application development market provides frameworks, tools, and platforms for building, customizing, and deploying applications powered by pre-trained language models. Companies in this market offer solutions for fine-tuning models on domain-specific data, creating prompt engineering workflows, developing retrieval-augmented generation systems, and orchestrating LLM-p…

LangChain was named an Outperformer among 15 other companies, including Cohere, Weights & Biases, and Anthropic.


Research containing LangChain

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned LangChain in 7 CB Insights research briefs, most recently on May 16, 2025.

May 16, 2025 report

Book of Scouting Reports: 2025’s AI 100

Apr 24, 2025 report

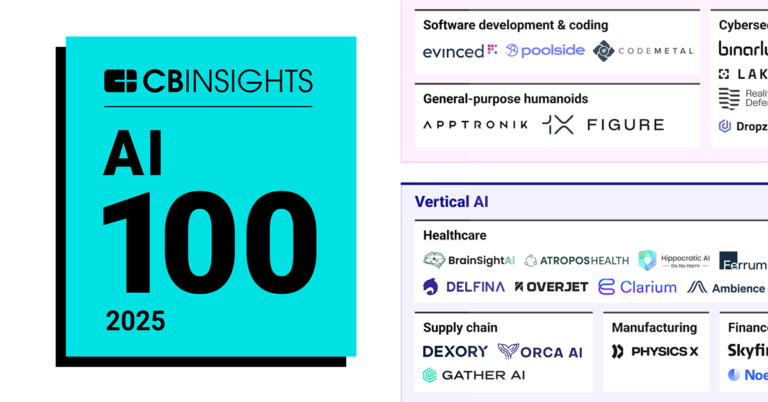

AI 100: The most promising artificial intelligence startups of 2025

Mar 6, 2025

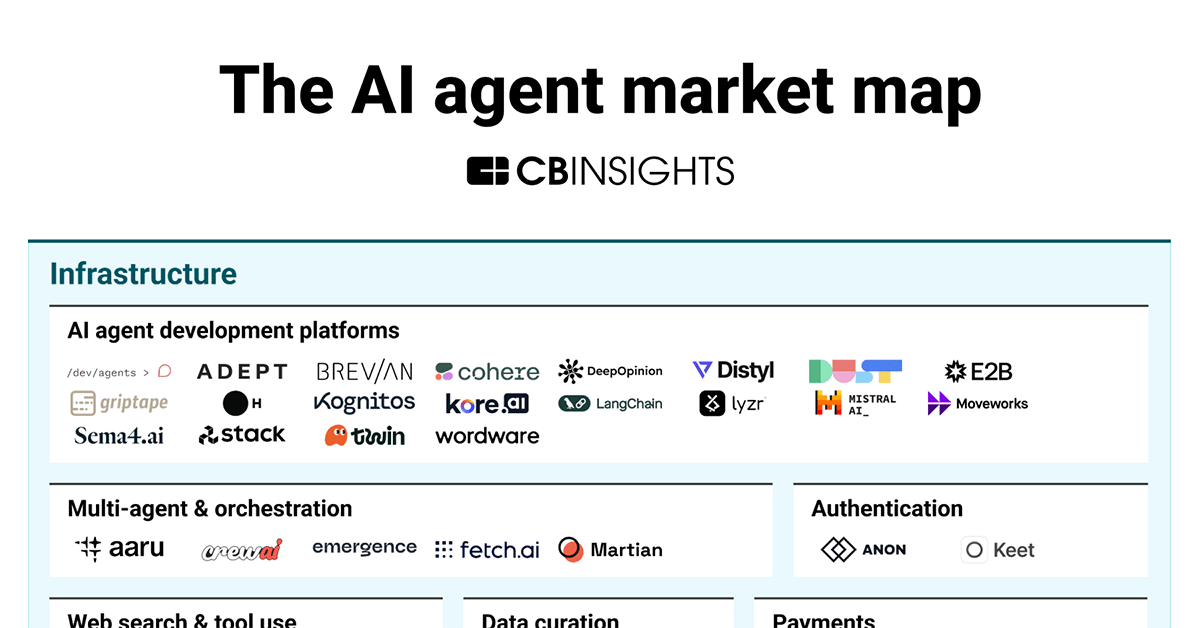

The AI agent market map

Oct 13, 2023

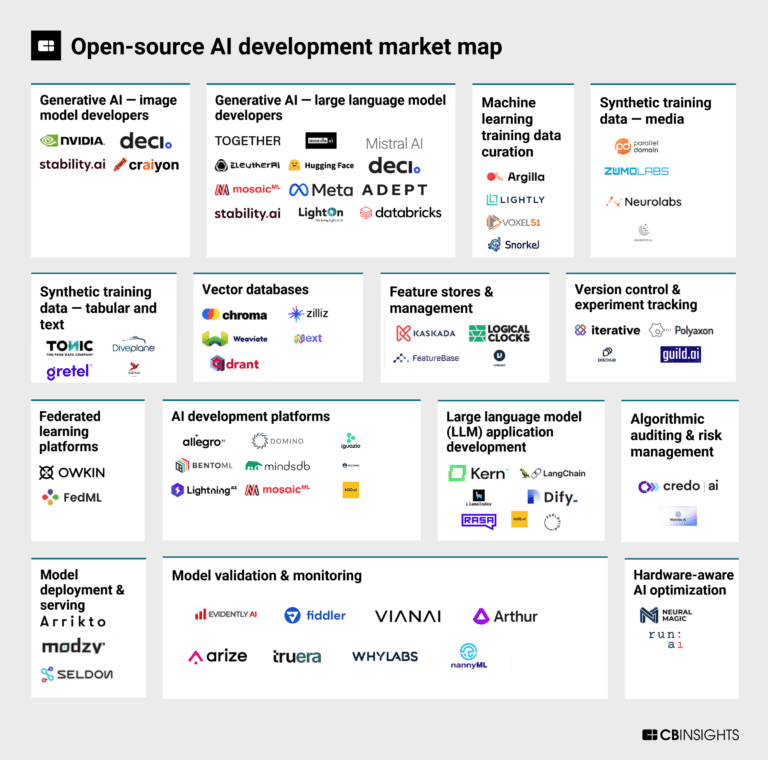

The open-source AI development market map

Expert Collections containing LangChain

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

LangChain is included in 7 Expert Collections, including AI 100 (All Winners 2018-2025).

AI 100 (All Winners 2018-2025)

200 items

Generative AI 50

50 items

CB Insights' list of the 50 most promising private generative AI companies across the globe.

Generative AI

2,314 items

Companies working on generative AI applications and infrastructure.

Artificial Intelligence

10,047 items

AI Agents & Copilots Market Map (August 2024)

322 items

Corresponds to the Enterprise AI Agents & Copilots Market Map: https://app.cbinsights.com/research/enterprise-ai-agents-copilots-market-map/

AI agents

374 items

Companies developing AI agent applications and agent-specific infrastructure. Includes pure-play emerging agent startups as well as companies building agent offerings with varying levels of autonomy. Not exhaustive.

Latest LangChain News

Jul 2, 2025

By iCopify | 8 minute read

Leveraging multiple AI models for better content ideation transforms the creative process from a single-source limitation into a powerhouse of diverse perspectives and specialised capabilities. While individual AI tools excel in specific areas, they often fall short of delivering the comprehensive, nuanced content that today’s audiences demand. By combining specialised models, content creators can unlock richer idea generation, enhanced accuracy, and more engaging final outputs that resonate across different platforms and audiences.

Why a multi-model approach beats one-tool creativity

Different AI models bring unique strengths to the creative table: some excel at narrative storytelling, others at analytical precision, and still others at visual conceptualisation. This diversity creates opportunities for cross-pollination of ideas that single-model approaches simply cannot match. The synergy between models produces content that is simultaneously creative, accurate, and tonally appropriate for specific audiences.

Complementary strengths of diverse AIs

Each AI model has been trained with different objectives and datasets, giving it distinct specialised capabilities that complement those of other models. GPT models excel at narrative flow and conversational tone, making them ideal for initial brainstorming and creative exploration. Claude brings nuance and analytical depth, which suits refining ideas and checking logical consistency. Meanwhile, models like Gemini offer multimodal capabilities that can generate and interpret visual concepts alongside text.

Enhanced reliability through cross-validation

The MIT CSAIL multi-agent debate concept demonstrates how multiple AI perspectives can significantly improve output quality through collaborative refinement. When different models evaluate and challenge each other’s outputs, the result is more accurate, less biased, and more comprehensive content.
This cross-validation approach offers several key benefits:

Reduced hallucinations through fact-checking between models
Enhanced creativity through integration of diverse perspectives
Improved accuracy via multiple verification layers
Broader perspective from models with different training approaches
Quality assurance through automated peer review

Workflow: Combining models step by step

A structured multi-model ideation pipeline turns chaotic brainstorming into a systematic process that maximises each model’s strengths while minimising individual weaknesses. This approach ensures that creative, analytical, and practical considerations are all covered throughout content development.

Step 1 – Brainstorm with a generative model

Begin the ideation process with a highly creative model like GPT-4, which excels at generating diverse, unexpected ideas without immediate constraints. This initial phase should favour quantity over quality, allowing maximum creative exploration before refinement begins.

Effective brainstorming prompts include:

“What are the most controversial yet valid perspectives on [subject]?”
“Create content ideas that combine [topic] with trending themes in [industry]”
“Develop story frameworks that make [complex topic] relatable to [target audience]”
“Brainstorm content formats that could make [boring topic] engaging and shareable”

Step 2 – Refine and polish with a second model

After generating raw creative material, use Claude’s analytical capabilities to refine structure, improve logical flow, and enhance the overall coherence of ideas. This refinement phase transforms creative chaos into structured, actionable content concepts.
Evaluate logical consistency across all generated ideas
Identify gaps in reasoning or supporting evidence
Restructure content for optimal flow and readability
Enhance clarity by simplifying complex concepts
Optimise tone for target audience engagement
Fact-check claims and verify statistical accuracy
Strengthen conclusions with compelling calls-to-action

Step 3 – Visualise concepts with image or video models

Complete the ideation process by incorporating visual elements that enhance understanding and engagement. Modern AI image and video generation tools can create compelling visual concepts that support and amplify your refined content ideas.

Visual formats to consider:

Illustration styles for abstract or complex topics
Video storyboards for dynamic content formats
Interactive elements for engagement-focused pieces
Brand-consistent graphics for social media adaptation

Tools that simplify model orchestration

Orchestration platforms reduce the technical complexity of managing multiple AI models, allowing content creators to focus on creative strategy rather than technical implementation. These tools provide unified interfaces that streamline the multi-model workflow while preserving the benefits of specialised AI capabilities.

Platforms for multi-AI integration

Several platforms have emerged to simplify multi-model coordination, each offering a different approach to AI orchestration and workflow management. These tools reduce the friction of switching between AI interfaces while keeping access to each model’s unique strengths.
LangChain – A comprehensive framework for building multi-model applications
Perplexity AI – Real-time research with multiple model integration
IBM watsonx – Enterprise-grade AI orchestration and management
Zapier AI – Workflow automation connecting various AI services
Microsoft Copilot – Integrated productivity suite with multiple AI capabilities

Jadve GPT Chat offers a unified AI interface, connecting multiple AI models through a single, streamlined platform. This tool eliminates the need to manage separate subscriptions and interfaces while providing access to the specialised capabilities of different AI models. Users can switch between models within a single conversation, maintaining context while leveraging each model’s strengths.

Use cases: From ideation to execution

Real-world content workflows demonstrate the practical value of multi-model approaches across various content types and industries. These applications show how different models can contribute to different stages of content creation, from initial concept to final publication.

Blog post & article planning

Multi-model approaches revolutionise long-form content creation by dividing the process into specialised phases that leverage each model’s strengths. This systematic approach ensures comprehensive coverage while maintaining high quality throughout development.

Efficiency gains include:

Enhanced research depth via specialised fact-checking models
Improved engagement through integration of diverse perspectives
Reduced revision cycles due to front-loaded quality control
Better SEO through keyword and structure analysis

Social campaign ideation

Multi-platform social campaigns benefit enormously from diverse AI perspectives, with different models contributing hooks, visuals, and audience-specific adaptations. This approach ensures consistent messaging while optimising for platform-specific engagement patterns.
Human review and feedback cycles

Human oversight remains crucial for ensuring that AI-generated content meets brand standards and audience expectations. Systematic review processes help identify model strengths and weaknesses while maintaining quality control.

Establish review checkpoints at each workflow stage
Create standardised evaluation criteria for consistent assessment
Document model performance patterns for future optimisation
Implement feedback loops to improve prompt strategies
Maintain quality thresholds across all content types

Overcoming challenges in multi-AI ideation

Integration challenges and cost considerations require careful planning and a strategic approach to multi-model implementation. Understanding these obstacles helps creators develop sustainable workflows that maximise benefits while managing resources effectively.

Managing costs & API limits

Budget management becomes more complex with multiple AI subscriptions, but strategic usage patterns can reduce costs while maintaining access to specialised capabilities.

Cost optimisation strategies:

Batch processing to maximise API efficiency
Free-tier use for initial brainstorming phases
Usage monitoring to identify the most cost-effective model combinations
Subscription optimisation based on actual usage patterns

Ensuring consistency in tone & style

Standardisation challenges arise when different models take varying approaches to tone and style. Consistent prompting strategies and style guides help maintain brand voice across all AI-generated content.
Consistency maintenance tips:

Create template prompts that reinforce brand voice
Establish quality checkpoints throughout the workflow
Use consistent terminology across all model interactions
Regularly calibrate model outputs against brand standards

Best practices for cross-AI ideation workflows

Practical guidelines drawn from successful multi-model implementations provide a framework for creators looking to optimise their AI-assisted content creation. These practices help maximise benefit while avoiding common pitfalls.

Maintain a clear prompting strategy

Structured multi-step prompts are essential for maintaining consistency and quality across different AI models. Each model requires a prompting approach that aligns with its strengths and limitations.

Key prompt elements:

Specific instructions tailored to each model’s capabilities
Output format specifications for consistent deliverables
Quality criteria that define acceptable standards
Iteration instructions for refinement processes

Evaluate outputs across models

A/B testing model outputs provides valuable insight into which models perform best for specific content types and audiences. This systematic evaluation improves decision-making and workflow optimisation.

Establish clear evaluation criteria before testing begins
Create comparable prompts across different models
Measure performance metrics consistently
Iterate based on results to improve effectiveness

Conclusion

The strategic combination of multiple AI models creates new opportunities for content ideation that go beyond the limitations of single-tool approaches. By leveraging the complementary strengths of different AI systems, content creators can achieve higher quality, greater efficiency, and more engaging outputs that resonate with diverse audiences.

LangChain Frequently Asked Questions (FAQ)

When was LangChain founded?

LangChain was founded in 2022.

Where is LangChain's headquarters?

LangChain's headquarters is located at 42 Decatur Street, San Francisco.

What is LangChain's latest funding round?

LangChain's latest funding round is Series A.

How much did LangChain raise?

LangChain raised a total of $35M.

Who are the investors of LangChain?

Investors of LangChain include Sequoia Capital, Benchmark, Lux Capital, Abstract, and Amplify Partners.

Who are LangChain's competitors?

Competitors of LangChain include Lyzr, LlamaIndex, LightOn, CrewAI, OctoAI and 7 more.


Compare LangChain to Competitors

Dify operates as a platform for developing generative artificial intelligence (AI) applications within the technology industry. The company provides tools for creating, orchestrating, and managing AI workflows and agents, using large language models (LLMs) for various applications. Dify's services are designed for developers who wish to integrate AI into products through visual design, prompt refinement, and enterprise operations. It was founded in 2023 and is based in San Francisco, California.

Fireworks AI specializes in generative artificial intelligence (AI) platform services, focusing on inference and model fine-tuning. The company offers an inference engine for building production-ready AI systems and provides a serverless deployment model for generative AI applications. It serves AI startups, digital-native companies, and Fortune 500 enterprises with its AI services. It was founded in 2022 and is based in Redwood City, California.

LlamaIndex specializes in building artificial intelligence knowledge assistants. The company provides a framework and cloud services for developing context-augmented AI agents, which can parse complex documents, configure retrieval-augmented generation (RAG) pipelines, and integrate with various data sources. Its solutions apply to sectors such as finance, manufacturing, and information technology by offering tools for deploying AI agents and managing knowledge. LlamaIndex was formerly known as GPT Index. It was founded in 2023 and is based in Mountain View, California.

Cohere is an enterprise artificial intelligence (AI) company building foundation models and AI products across various sectors. The company offers a platform that provides multilingual models, retrieval systems, and agents to address business problems while ensuring data security and privacy. Cohere serves financial services, healthcare, manufacturing, energy, and the public sector. It was founded in 2019 and is based in Toronto, Canada.

Lamini develops large language models (LLMs) for the enterprise sector. Its offerings include a platform for developing and fine-tuning LLMs to address hallucinations, as well as tools for creating text-to-SQL agents, automating classification tasks, and integrating function calling with external tools and application programming interfaces (APIs). Lamini serves sectors that require accuracy and security in LLM applications, including Fortune 500 companies and artificial intelligence (AI) startups. It was founded in 2022 and is based in Menlo Park, California.

CrewAI develops technology related to multi-agent automation within the artificial intelligence sector. The company provides a platform for building, deploying, and managing AI agents that automate workflows across various industries. Its services include tools, templates for development, and tracking and optimization of AI agent performance. The company was founded in 2024 and is based in Middletown, Delaware.
