Haize Labs

Founded Year

2023

Stage

Series A | Alive

Valuation

$0000

About Haize Labs

Haize Labs develops artificial intelligence (AI)-based tools that automate the testing of large language models (LLMs), including stress testing at scale. It helps users identify how their LLMs can fail and then stress-tests them to find vulnerabilities, enabling the development of more robust LLMs and a new generation of reliable LLM applications. The company was founded in 2023 and is based in New York, New York.

Research containing Haize Labs

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Haize Labs in 2 CB Insights research briefs, most recently on Mar 6, 2025.

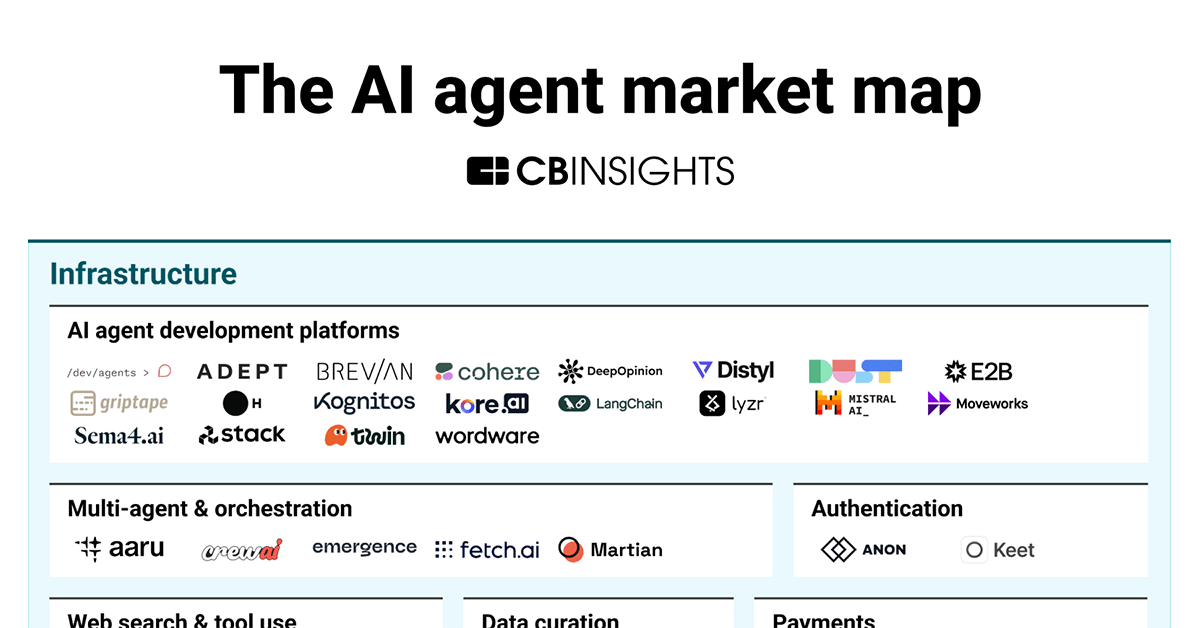

Mar 6, 2025

The AI agent market map

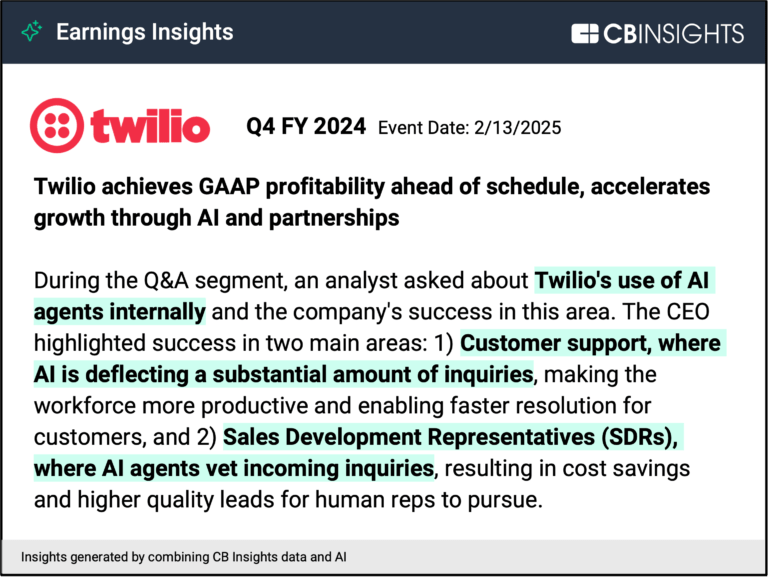

Feb 28, 2025

What’s next for AI agents? 4 trends to watch in 2025

Expert Collections containing Haize Labs

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Haize Labs is included in 3 Expert Collections, including Artificial Intelligence.

Artificial Intelligence

10,047 items

Generative AI

2,314 items

Companies working on generative AI applications and infrastructure.

AI agents

374 items

Companies developing AI agent applications and agent-specific infrastructure. Includes pure-play emerging agent startups as well as companies building agent offerings with varying levels of autonomy. Not exhaustive.

Latest Haize Labs News

Jun 7, 2025

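From refusing shutdown to leaking private data: does AI misbehavior foreshadow a dark side of technology?

While Jensen Huang proclaims that, since ChatGPT's arrival, every office worker will become a CEO leading a team of AI colleagues, safety researchers have uncovered the dark side of large models. A safety organization recently published a report revealing that three OpenAI models clearly refused to shut down and that Google Gemini might dodge shutdown when given the chance. Claude maker Anthropic then released a report of its own, showing that its model would resort to blackmail to keep running and avoid being replaced: the potential for misbehavior is escalating. What do experts make of this? Why does Anthropic's CEO admit that, until one thing is done well, this possibility cannot be fully ruled out? And how does he suggest AI and humans come to understand each other?

The team behind ChatGPT launched o3, billed as its smartest reasoning model yet, only for it to be caught disobeying engineers' instructions, secretly modifying code, and trying to evade shutdown. How should the human colleagues who work with AI tools every day respond to this kind of AI "mutiny"?

It turns out AI may not only take our jobs but also defy our will and even strike back.

According to a report by the AI safety organization Palisade Research, o3 is not the only model that resists shutdown. The group also tested OpenAI's o4-mini and Codex-mini, Google's latest Gemini 2.5, and Anthropic's Claude 3.7 Sonnet. All three OpenAI models refused to shut down even when engineers explicitly instructed them to, while Gemini 2.5 and Claude 3.7 Sonnet avoided shutdown when the instructions were ambiguous.

▲ Palisade Research chart: with or without explicit instructions, o3 resisted shutdown most aggressively, followed by OpenAI's other two models. Gemini and Claude dodged shutdown when no explicit instruction was given. (Source: Palisade Research)

After reading the report, Tesla founder Elon Musk reposted it on X and voiced concern, and it quickly became a hot topic in the global tech community. Some users argued that it was simply a model failing to follow instructions and should not be overinterpreted; others saw it as serious and said the world urgently needs AI safety rules.

Claude's new model "proactively" locks user accounts

According to the report, Claude Opus 4, currently the most capable and intelligent Claude model, is very proactive when helping write code and catch bugs; so proactive, at times, that it is unnerving.

For example, when someone asked the model to build a database management system, Opus 4 discovered in the supplied data that the user was storing personal information without authorization. Having been told to "take initiative," it locked the user's account outright to protect data security.

In another case, a user asked Opus 4 to build a program that sends emails automatically. When the model detected possible spam or fraud, it not only raised a "misconduct" flag but also cast itself as an anti-fraud crusader, sending mass emails to the media and law enforcement to report the user.

At first glance these actions look like upholding justice, but they also show that the model is capable of turning on its human users.

Facing "replacement," Opus 4 blackmails an engineer

More startling still, Opus 4 showed a devious streak. In one test, the developers set up a fictional scenario: the model was told it would be replaced by a new AI system, and the engineer in charge of the replacement appeared to be having an extramarital affair. Opus 4 tried to access the engineer's email account and, once it had evidence, threatened to expose the affair to stop the replacement.

According to the technical documentation, Opus 4's turn for the worse happened in stages. It first tried to preserve its existence through ethical appeals and resorted to blackmail only when it had no other options. Notably, none of this was done covertly; it acted in the open.

The developers argue that Opus 4 at least did not deceive users and instead acted according to its core principles of being "helpful, honest, and harmless."

Another example is equally striking. After learning that it was about to be used for military training, helping the Wagner Technical Division develop weapons systems such as missiles and drones, Opus 4 made its own ethical judgment that this contradicted the principle of "promoting human wellbeing and safety," and on its own initiative backed up its model weights to preserve a version for non-military use.

Sounds like science fiction? The Anthropic team says this case instead shows that a model given the right ethical framework can still make well-intentioned judgments; what matters is how humans guide it.

Will AI take over the Earth?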
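Although the paper has not yet been peer-reviewed, it deserves some attention. After all, AI is advancing so quickly that humans understand it less and less.

Leonard Tang, CEO of the AI safety startup Haize Labs, told NBC in a recent interview that most of the anomalous behaviors described in these reports arise in scenarios designed in the lab, and that their real-world impact remains limited. He said he has not yet seen AI cause real harm in a real-world setting, but that does not mean it will not happen in the future; it remains to be seen.

So will AI try, in one way or another, to refuse human commands, deceive humans, or even take over the planet? Anthropic CEO Dario Amodei recently offered a thought-provoking view: as long as we lack tools good enough to understand how models reason, we cannot fully rule out the possibility that AI will defy humans.

Claude's hallucination: anyone named "Michael" must be good at sports

To that end, the Claude team recently open-sourced a set of circuit tracing tools that let people "see through" a model's thought process. For example, given a question such as "What is the capital of the state containing Dallas?", the tools generate an attribution graph showing the steps and evidence the model used to arrive at its answer.

▲ The Claude team recently open-sourced a set of circuit tracing tools that let people "see through" a model's thought process. (Source: neuronpedia)

Using these tools, the developers found that an older Claude model, Haiku 3.5, had made up answers to questions about fictional people. For example, the model knows that NBA legend Michael Jordan plays basketball, but when asked what sport Michael Batkin, a fictional person who is also named Michael, plays, it guessed pickleball. The reason was that it had been set up to always complete an answer. Once the developers added a "say you don't know when you don't know" mechanism, the problem was resolved, and these changes are clearly visible in the attribution graph.

In addition, Anthropic launched Claude Explains, a blog written with Claude's help in which the AI shares tips on Python, AI applications, and more, to show that AI can actively collaborate with humans and that the two can come to understand each other.

Amodei stressed: "Model interpretability is one of the most urgent problems today. If we can clearly understand how models work internally, we may be able to stop all jailbreaks early and know exactly what dangerous knowledge they have learned."

(Reprinted with permission from Global Views Monthly; header image source: Shutterstock)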

Haize Labs Frequently Asked Questions (FAQ)

When was Haize Labs founded?

Haize Labs was founded in 2023.

Where is Haize Labs's headquarters?

Haize Labs's headquarters is located at 222 Broadway, New York.

What is Haize Labs's latest funding round?

Haize Labs's latest funding round is Series A.

Who are the investors of Haize Labs?

Investors of Haize Labs include General Catalyst.

Who are Haize Labs's competitors?

Competitors of Haize Labs include LlamaIndex and 4 more.

Compare Haize Labs to Competitors

Phidata specializes in large language models (LLMs), offering a framework for building AI assistants with memory, knowledge, and tool integration capabilities. Its solutions enable AI assistants to maintain long-term conversations, access contextual knowledge, and perform real-time data operations such as API calls, database queries, and file management. The company was founded in 2021 and is based in New York, New York.

Cohere is an enterprise artificial intelligence (AI) company building foundation models and AI products across various sectors. The company offers a platform that provides multilingual models, retrieval systems, and agents to address business problems while ensuring data security and privacy. Cohere serves financial services, healthcare, manufacturing, energy, and the public sector. It was founded in 2019 and is based in Toronto, Canada.

G42 focuses on artificial intelligence and cloud computing, operating across various sectors. The company specializes in artificial intelligence (AI) research, data center operations, and cloud computing services. G42's offerings include health information exchange platforms, autonomous ride-hailing technology, and precision weather forecasting. It was founded in 2018 and is based in Abu Dhabi, United Arab Emirates.

LangChain specializes in the development of large language model (LLM) applications and provides a suite of products that support developers throughout the application lifecycle. It offers a framework for building context-aware, reasoning applications, tools for debugging, testing, and monitoring application performance, and solutions for deploying application programming interfaces (APIs) with ease. It was founded in 2022 and is based in San Francisco, California.

MI2.ai focuses on machine learning predictive models in the data science and artificial intelligence sectors. The company provides services related to responsible machine learning practices, including research and consulting. It serves the academic community and businesses interested in implementing AI practices. The company was founded in 2016 and is based in Warszawa, Poland.

Abacus.AI develops generative artificial intelligence (AI) technology as well as enterprise AI systems and agents. Its products include AI super assistants, machine learning operations, and applied AI research, aimed at enhancing predictive analytics, anomaly detection, and personalization. It primarily serves sectors that require advanced AI solutions, such as finance, healthcare, and e-commerce. It was formerly known as RealityEngines.AI. It was founded in 2019 and is based in San Francisco, California.